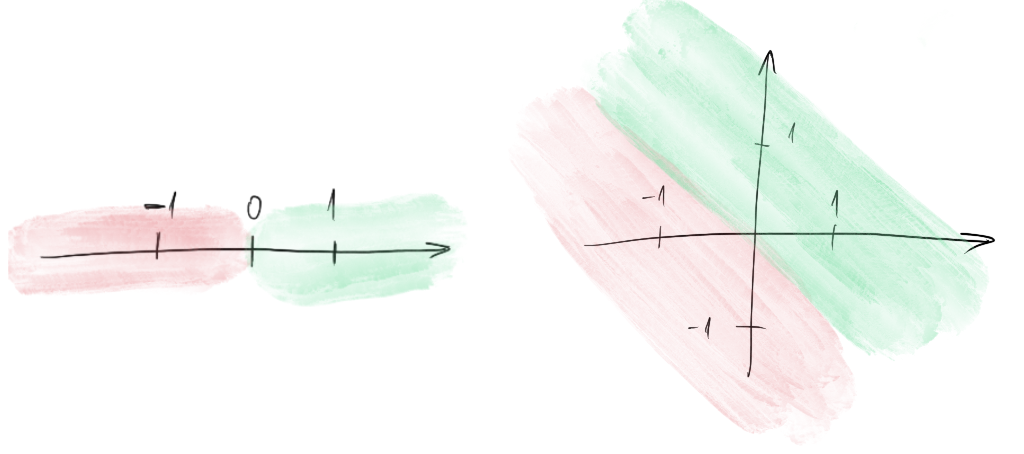

Understanding shapes is an integral part of demystifying how data gets passed around in your model. If you are unfamiliar with shapes, my previous post, Shapes – an introduction, might help. In this post, I’ll explore how TensorFlow expects to receive data, and hopefully provide a better intuition for how to conceptualise your data pipeline.

We usually encounter shapes when passing tensors around. All of us have been confused by shape-related complaints coming from our ML framework of choice when first building our own datasets.

Let’s dive directly into a common situation: we’re starting out with TensorFlow and trying to train a simple perceptron to predict 1 when we give it 1 as input:

    import tensorflow as tf

    # define our input and output
    # when asked to predict 1, we train it to output 1
    x = 1
    y = 1

    # create model, we use the keras sequential model
    model = tf.keras.Sequential()

    # a perceptron in keras is a Dense layer with 1 neuron
    perceptron = tf.keras.layers.Dense(1)

    # add it to the model
    model.add(perceptron)

    # compile the model to use mean squared error as the loss function
    model.compile(loss="mse")

    # finally, train it
    model.fit(x,y)

IndexError: tuple index out of range

This fails. The error message is cryptic, but the answer is simple: TensorFlow always expects a batch whenever it does anything. The scalar we provided has the empty shape (), so it has no batch dimension at all. The solution is to turn it into a batch by putting the number in a list. A batch of one number has the shape (1,). This looks funny since it has a comma but only one number, but that is how Python writes a one-element tuple: without the comma, (1) is just the number 1 in parentheses. Here’s the fix to get the previous code training:

    x = [1]
    y = [1]

    Train on 1 samples
    1/1 [==============================] - 0s 115ms/sample - loss: 3.8462
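To make the difference between a scalar and a batch of one concrete, here is a small sketch that inspects the shapes directly. It uses NumPy's np.shape as a stand-in for whatever check Keras performs internally; that the IndexError above comes from exactly this kind of lookup is my assumption, not something the error message confirms.

```python
import numpy as np

# In Python, parentheses alone do not make a tuple; the trailing comma does.
not_a_tuple = (1)
one_tuple = (1,)
print(type(not_a_tuple).__name__, type(one_tuple).__name__)  # int tuple

# A bare scalar has the empty shape (), while a one-item list has shape (1,).
scalar_shape = np.shape(1)
batch_shape = np.shape([1])
print(scalar_shape, batch_shape)  # () (1,)

# Asking an empty shape for its first (batch) dimension raises an IndexError.
try:
    scalar_shape[0]
    error_name = None
except IndexError as err:
    error_name = type(err).__name__
    print(error_name, err)  # IndexError tuple index out of range
```

Wrapping the number in a list gives the shape a first entry, which is the batch dimension that was missing.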

Now we’re telling TensorFlow to fit on a batch of one training example and its one corresponding label. This, however, is a nicety of TensorFlow: it’s kind enough to let us ignore the proper shape that we should be using. Let’s expand the example to a more illustrative case in which we have multiple items in our dataset:

    x = [-1,-1,1,1]
    y = [0,0,1,1]

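This is still fine and TensorFlow does not complain. In practice, however, we very rarely have single-dimensional data as inputs.

Once each example has more than one value, a flat list no longer works: nothing in [-1,-1,1,1] says whether it holds four examples of one value each or two examples of two values each. If each input has two dimensions, we clearly need an input of shape (Batch_Size, 2):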

    x=[[-1,0],[0,-1],[0,1],[1,0]]
    y=[0, 0, 1, 1]
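A quick way to confirm what shape a nested list really has is to convert it to an array and look. The sketch below uses NumPy for this (my choice, not part of the original code); the variable names batch_size, num_features and labels_match are only illustrative.

```python
import numpy as np

# The two-feature dataset from above, converted to arrays so we can inspect it.
x = np.array([[-1, 0], [0, -1], [0, 1], [1, 0]], dtype=np.float32)
y = np.array([0, 0, 1, 1], dtype=np.float32)

# First dimension: number of examples (the batch).
# Second dimension: number of values per example.
batch_size, num_features = x.shape
print(x.shape)  # (4, 2)
print(y.shape)  # (4,)

# Every example needs exactly one label.
labels_match = batch_size == y.shape[0]
print(labels_match)  # True
```

Checking these two numbers before calling fit is usually quicker than decoding a shape error afterwards.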

It’s therefore a good idea to always keep the proper shapes, if not for TensorFlow’s sake, then to help us remember what role each dimension plays. A one-feature input written with its explicit shape (4,1) still runs:

x = [[1],[2],[3],[4]]
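If the data starts out as a flat list, the explicit (4,1) shape does not have to be typed by hand. Here is a minimal sketch using NumPy's reshape (an assumption on my part; the post itself only uses Python lists at this point), which also shows why the flat version is ambiguous.

```python
import numpy as np

flat = np.array([1, 2, 3, 4])    # shape (4,): four bare numbers

# -1 asks NumPy to infer that dimension from the total number of elements.
as_column = flat.reshape(-1, 1)  # shape (4, 1): 4 examples, 1 value each
as_pairs = flat.reshape(-1, 2)   # shape (2, 2): 2 examples, 2 values each

print(as_column.tolist())  # [[1], [2], [3], [4]]
print(as_pairs.tolist())   # [[1, 2], [3, 4]]
```

Both results hold the same four numbers, so only the shape tells the model which interpretation we mean.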

RNNs and CNNs

This is where many people, myself included, first encounter the shape problem. Let’s focus on RNNs first, and I’ll show the CNN code afterwards. The problems and solutions are identical.

RNNs

Let’s define a simple RNN model that takes 4 time steps of one value each and predicts a scalar.

    import tensorflow as tf

    x=[
        [1,2,3,4],
        [4,3,2,1]
    ]
    y=[0, 1]

    model = tf.keras.Sequential()
    rnn = tf.keras.layers.LSTM(1)
    model.add(rnn)
    perceptron = tf.keras.layers.Dense(1)
    model.add(perceptron)
    model.compile(loss="mse")
    model.fit(x,y)

ValueError: Input 0 of layer sequential is incompatible with the layer: expected ndim=3, found ndim=2. Full shape received: [None, 4]

We finally get TensorFlow to complain. If this used the same logic as the previous Dense-only code, it would happily understand that we’re trying to get it to take a sequence of four numbers as the recurrent time steps. Alas, we need to be more precise. Let’s reshape the input:

x = tf.reshape(x, (-1, 4, 1)) # tf.Tensor([[[1][2][3][4]][[4][3][2][1]]], shape=(2, 4, 1), dtype=int32)

ValueError: Failed to find data adapter that can handle input: <class 'tensorflow.python.framework.ops.EagerTensor'>, (<class 'list'> containing values of types {"<class 'int'>"})

Another cryptic error message is thrown at us. It’s telling us that it can’t handle this combination of inputs: a tensor together with a plain list of ints. Eagle-eyed readers will have noticed that the reshape operation output a tensor of dtype int32. However, this error message actually complains about y, which is still a Python list! We fix this error by converting y to a tensor beforehand, a good general practice to follow:

y=tf.constant([0, 1])

TypeError: Input 'b' of 'MatMul' Op has type float32 that does not match type int32 of argument 'a'.

A final error! A matrix multiplication operation received int values even though it expected floats. This could theoretically come from the loss function, but it’s more likely to come from the feed-forward pass. We already saw that x has type int32, so let’s try changing that to float using TensorFlow’s tf.cast function. This runs, so here’s the entire code:

    import tensorflow as tf

    # two sequences of four time steps each
    x=[
        [1,2,3,4],
        [4,3,2,1]
    ]
    # labels as a tensor rather than a plain list
    y=tf.constant([0, 1])

    # (batch, time steps, values per step), then int32 -> float32
    x = tf.reshape(x, (-1, 4, 1))
    x = tf.cast(x, tf.float32)

    model = tf.keras.Sequential()
    rnn = tf.keras.layers.LSTM(1)
    model.add(rnn)
    perceptron = tf.keras.layers.Dense(1)
    model.add(perceptron)
    model.compile(loss="mse")
    model.fit(x,y)

    Train on 2 samples
    2/2 [==============================] - 1s 370ms/sample - loss: 0.7737
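The three fixes above (a third dimension, a non-list y, float inputs) can also be applied in one go before the data ever reaches the model. The sketch below does this with NumPy arrays instead of tf.reshape and tf.cast; that is a substitution on my part, and the names raw_x and raw_y are only illustrative. Keras accepts NumPy arrays as input to fit, so these arrays should be usable in place of the tensors in the code above.

```python
import numpy as np

# Same toy data as above: 2 sequences of 4 time steps each.
raw_x = [[1, 2, 3, 4], [4, 3, 2, 1]]
raw_y = [0, 1]

# float32 up front avoids the int32/float32 mismatch; the new trailing axis
# gives each time step its single value.
x = np.asarray(raw_x, dtype=np.float32)[..., np.newaxis]
y = np.asarray(raw_y, dtype=np.float32)

print(x.shape, x.dtype)  # (2, 4, 1) float32
print(y.shape, y.dtype)  # (2,) float32
```

Reading the shape left to right gives batch size, time steps, and values per time step, which is the ndim=3 layout the earlier error message asked for.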

CNNs

Here’s a simple convolutional model facing the same problem as the recurrent example previously shown. Fixing it is left as an exercise to the reader.

    import tensorflow as tf

    x=[
        [1,2,3,4],
        [4,3,2,1]
    ]
    y=[0, 1]

    model = tf.keras.Sequential()
    conv = tf.keras.layers.Conv1D(1,1)
    model.add(conv)
    model.add(tf.keras.layers.Flatten())
    perceptron = tf.keras.layers.Dense(1)
    model.add(perceptron)
    model.compile(loss="mse")
    model.fit(x,y)

Summary

I hope this post has managed to clear up some confusion regarding input shapes in TensorFlow, which often cause trouble for newcomers. Thanks for reading!